Andrew Zola

In recent years, object detection and segmentation have accelerated significantly. Today, smart algorithms can find and classify countless individual objects within a video or an image. Although it was incredibly difficult for machines to do, it’s now part of our daily existence.

Both object detection and segmentation are powered by Artificial Intelligence (AI), Machine Learning (ML), and Deep Learning (DL). In this scenario, convolutional neural networks can locate and identify the class each item belongs to within an image.

It has also evolved to be much more than an intelligent algorithm that can recognize objects in photographs stored in a database. It can now find and classify objects in real-time to enable things like self-driving autonomous vehicles and more.

Object detection is a broad term that describes a collection of computer vision-related tasks that involve detecting, recognizing, localizing, and classifying objects within multiple visual instances like photographs and videos.

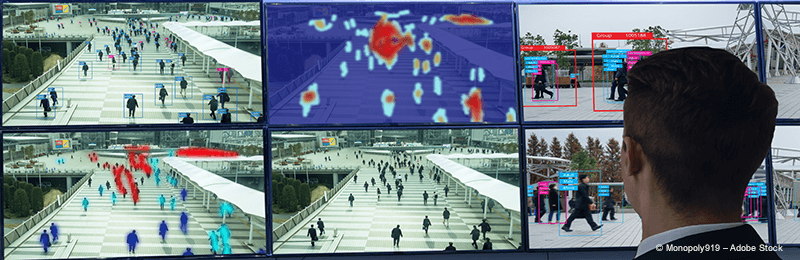

There’s also a variant of object detection called person detection. In this case, we utilize object detection to determine the primary class a “person” in images and video frames belongs to. As such, person detection is a vital part of modern video surveillance systems and face recognition technologies.

Object segmentation in this context describes the process of determining the areas and boundaries of an object within an image. We, humans, do this all the time without being conscious of it.

However, it remains a difficult hurdle to overcome for machines that navigate the world. In fact, segmentation remains the most challenging type of classification task.

There are two types of segmentation:

Generally, for practical reasons, the output from segmentation networks is often represented by coloring pixels.

The image classification part involves predicting the object’s class or determining if a particular object is present within an image. Object detection takes image classification to the next level by adding localization.

Localization involves determining the location of the object within an image and segmenting it. Once found, the algorithms draw a boundary box around the object. Whenever you do this, it provides a better understanding of the object as a whole. Without it, we’ll just have basic object classification.

Object detection and segmentation are important because they provide the foundation for most computer vision-driven tasks. These include image captioning, image segmentation, and object tracking.

Object detection is dual-natured and can classify objects within an image while also determining their positions It’s also highly accurate, reliable, and efficient at real-time object detection.

There are plenty of smart algorithms you can train to identify objects and people. These are the top eight algorithms dominating the industry:

Tip:

Order suitable training data sets, such as images and videos, to perfectly train your object detection systems, via clickworker.

Whether it’s video dataset harvesting or photo dataset harvesting,

our Clickworkers are available to build extensive datasets for your next project.

Region-based Convolutional Neural Networks or R-CNN was proposed in 2014 to resolve the problem of selecting a large number of regions at once. R-CNN allows us to perform selective searches to extract no more than 2000 regions from an image known as region proposals. This approach enables algorithms to work with these 2000 regions instead of classifying an enormous number of regions.

A year later, Fast R-CNN was introduced to combine both region selection and feature extraction into one machine learning model. In this case, when Fast R-CNN gets an image and a set of Rols, it returns a list of classes and bounding boxes of objects detected within the image.

The key innovation here was the addition of the RoI pooling layer. The Rol pooling layer takes CNN feature maps and regions of interest in an image and matches them with corresponding features for each region. This enabled the extraction of features for all the regions of interest quickly. Before Fast R-CNN, R-CNN processed each region separately and was time-intensive.

As Fast R-CNN still demanded the extraction of regions within an image and inputted into the model, it fell short of real-time object detection. As a result, Faster R-CNN was introduced in 2016 to solve this problem.

Faster R-CNN, unlike its predecessors, takes an image as input. It then processes it and returns a list of object classes along with their corresponding bounding boxes. With region detection added into the main neural network architecture, we can now achieve near-real-time object detection with Faster R-CNN.

R-FCN is a fully convolutional image classifier and detects regions in full scale, sharing almost all computation on the entire image. It’s efficient and highly accurate compared to Fast/Faster R-CNN that leverages per-region subnetworks hundreds of times. In contrast, R-FCN uses only the latest residual networks to detect objects in images.

HOG counts the number of gradient orientation occurrences in localized portions of an image. This approach helps predict the probability of containment of an object in a frame.

Similar to the slang (You Only Live Once), YOLO stands for “You Only Look Once.” Although the R-CNN model family is generally more accurate at object detection, YOLO is much faster at realizing object detection in real-time.

In this scenario, a single neural network takes an image as an input and predicts the bounding boxes and related class labels for each bounding box. Although this technique has low predictive accuracy, the tradeoff is that it can process up to 155 frames a second. Now that’s mind-blowing and fast!

This makes YOLO suitable for performing object detection at video streaming framerates or any application that demands real-time inference.

SSD can detect multiple objects within an image with only a single shot. This makes SSD much faster than two-shot R-CNN-based approaches.

The convolutional neural architecture SSP-Net uses spatial pyramid pooling to eliminate the fixed-size constraint of the network. This means that CNN won’t need a fixed-size input image anymore. It allows variable size input images to CNN to be classified.

However, regardless of the algorithm or model you use, you need extensive data sets for training. If you want your smart machines to successfully navigate a world full of objects and people, you must train them more to help them get better at mimicking humans.

Object detection and segmentation still have a long way to go. However, the good news is that we’re getting close to achieving total autonomy.

Andrew Zola